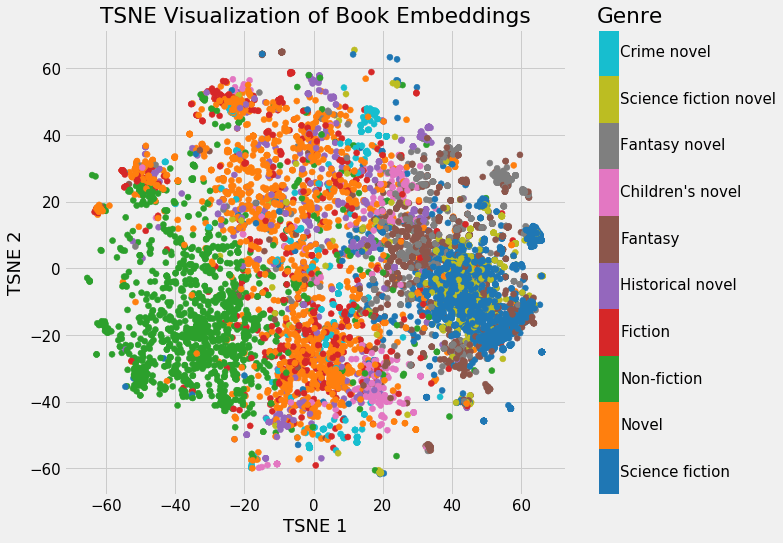

Word Embedding Visualization

Word2vec is a method for efficiently creating word embeddings and has been around since 2013. But in addition to its utility as a word … Word embeddings map words in a … A typical embedding might use a 300-dimensional space, so each word would be represented by 300 numbers. These representations are called word embeddings. Word embedding visualization allows you to explore huge graphs of word dependencies as captured by different embedding algorithms (word2vec, GloVe, …).
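As a rough illustration of the ideas above, the sketch below represents each word as a list of 300 numbers and links every word to its most similar neighbours, which is one simple way to obtain a graph that could then be drawn. The vectors here are random stand-ins, not trained embeddings; in practice they would come from a trained model such as word2vec or GloVe. The function names (`make_toy_embeddings`, `cosine_similarity`, `nearest_neighbour_edges`) and the word list are hypothetical and chosen only for this example.

```python
import math
import random

DIM = 300  # dimensionality used as the example in the text


def make_toy_embeddings(words, dim=DIM, seed=0):
    """Return {word: list of `dim` floats}.

    Assumption: random Gaussian vectors stand in for trained embeddings.
    """
    rng = random.Random(seed)
    return {w: [rng.gauss(0.0, 1.0) for _ in range(dim)] for w in words}


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # similarity is undefined for a zero vector; treat as 0
    return dot / (norm_u * norm_v)


def nearest_neighbour_edges(embeddings, k=2):
    """Link each word to its k most similar other words.

    Returns a list of (source, target, similarity) tuples.
    """
    edges = []
    for word, vec in embeddings.items():
        scored = [
            (cosine_similarity(vec, other_vec), other)
            for other, other_vec in embeddings.items()
            if other != word
        ]
        scored.sort(reverse=True)  # highest similarity first
        edges.extend((word, other, score) for score, other in scored[:k])
    return edges


# Hypothetical vocabulary, used only to exercise the functions.
embeddings = make_toy_embeddings(["king", "queen", "man", "woman", "apple"])
edges = nearest_neighbour_edges(embeddings, k=2)

for source, target, score in edges:
    print(f"{source} -> {target} (cosine similarity {score:.3f})")
```

With random vectors the resulting edges carry no meaning; with trained embeddings, the same edge list could be handed to a graph-drawing tool to explore which words sit close together.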


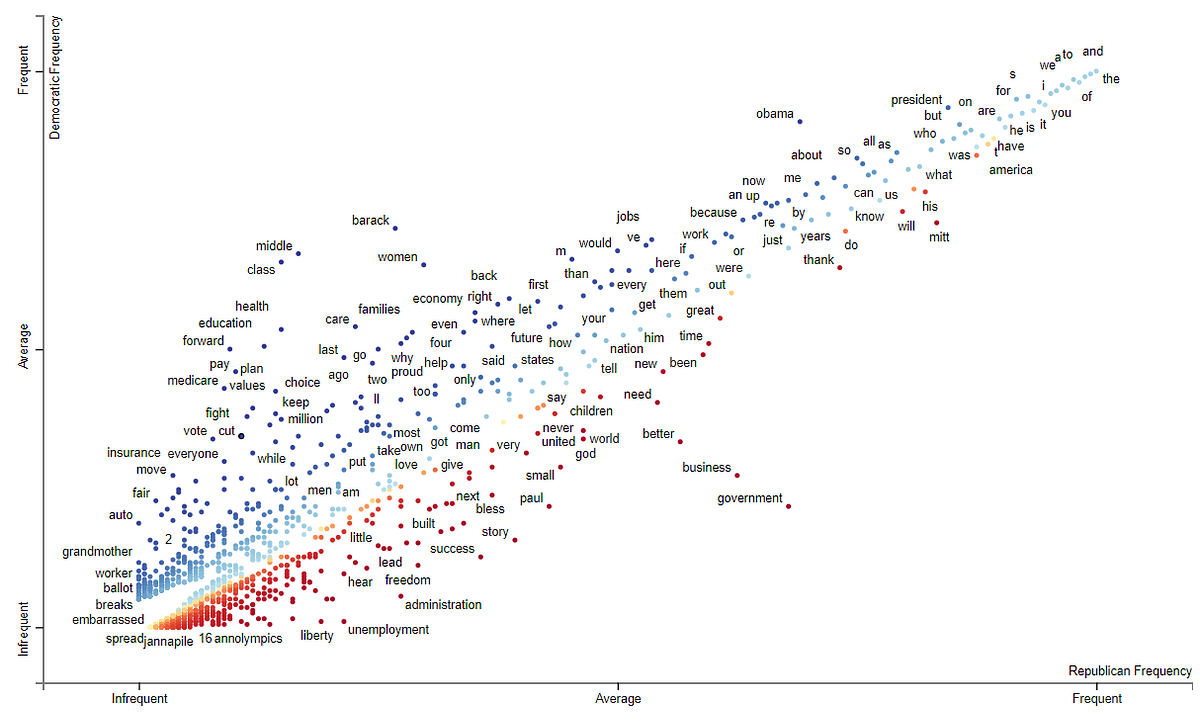

Visualization of the word embedding space


The Ultimate Guide to Word Embeddings


Word Embedding Guide


Word Embeddings for NLP. Understanding word embeddings and their… by


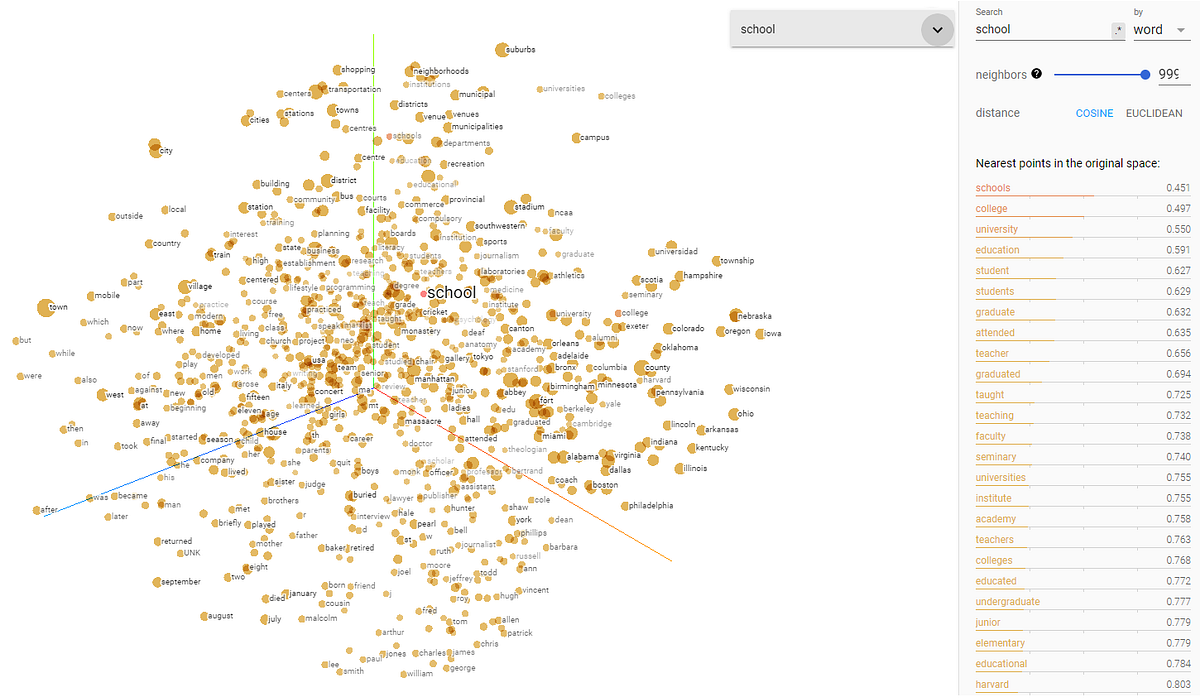

Visualizing your own word embeddings using Tensorflow by aakash


Word Embeddings for PyTorch Text Classification Networks


Word and document embedding visualization


Most Popular Word Embedding Techniques In NLP


![Word Embedding Guide](https://iq.opengenus.org/content/images/2021/09/word-embedding-header.png)