OLS Matrix Form

We present here the main OLS algebraic and finite-sample results in matrix form. The model is y = Xβ + e, where y and e are column vectors of length n (the number of observations) and X is a matrix of dimensions n by k. The matrix X is sometimes called the design matrix: it is the matrix of predictors/covariates in a regression.

\[
X = \begin{bmatrix}
1 & x_{11} & x_{12} & \dots \\
1 & x_{21} & x_{22} & \dots \\
\vdots & \vdots & \vdots & \\
1 & x_{n1} & x_{n2} & \dots
\end{bmatrix}
\]

X is assumed to have full column rank: there is no non-zero (k × 1) vector c such that Xc = 0. That is, no column is a linear combination of the others.

For a vector x, x′x is the sum of squares of the elements of x (a scalar), and xx′ is an n × n matrix whose ij-th element is x_i x_j.

Mean squared error: at each data point, using the coefficients results in some error.
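As a minimal sketch of these objects, the following NumPy snippet builds a design matrix with a leading column of ones, checks that no non-zero vector c gives Xc = 0 (full column rank), and computes least-squares coefficients together with the mean squared error. The data, the coefficient values, and all variable names are illustrative assumptions rather than anything given in the source, and `np.linalg.lstsq` is just one convenient way to obtain the fit.

```python
import numpy as np

# Hypothetical data: n = 5 observations and two regressors (assumed for illustration).
rng = np.random.default_rng(0)
n = 5
regressors = rng.normal(size=(n, 2))

# Design matrix X: a leading column of ones, then the regressors (n by k, with k = 3).
X = np.column_stack([np.ones(n), regressors])

# Illustrative coefficients and errors, so that y = X beta + e.
beta = np.array([1.0, 2.0, -0.5])
e = rng.normal(scale=0.1, size=n)
y = X @ beta + e

# Full column rank means the rank of X equals its number of columns k.
k = X.shape[1]
has_full_rank = np.linalg.matrix_rank(X) == k

# Least-squares coefficients, residuals, and the mean squared error of the fit.
beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
residuals = y - X @ beta_hat
mse = float(residuals @ residuals) / n
```

If a column of X were an exact copy or linear combination of other columns, `has_full_rank` would be `False` and the coefficients would no longer be uniquely determined.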


SOLUTION Ols matrix form Studypool


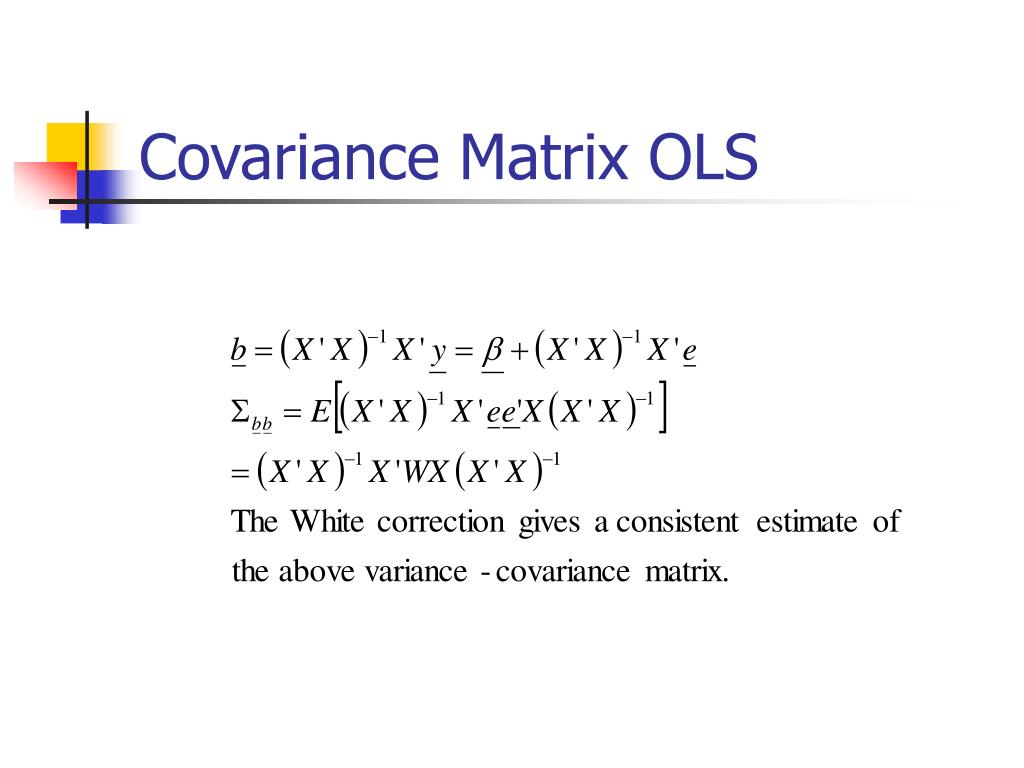

Linear Regression with OLS Heteroskedasticity and Autocorrelation by


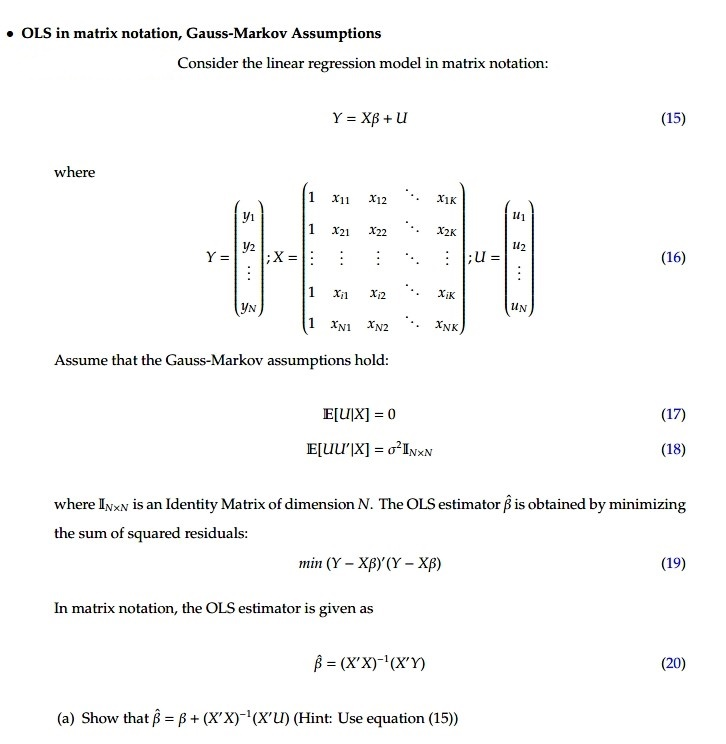

Solved OLS in matrix notation, Gauss-Markov Assumptions



Ols in Matrix Form Ordinary Least Squares Matrix (Mathematics)


Vectors and Matrices Differentiation Mastering Calculus for


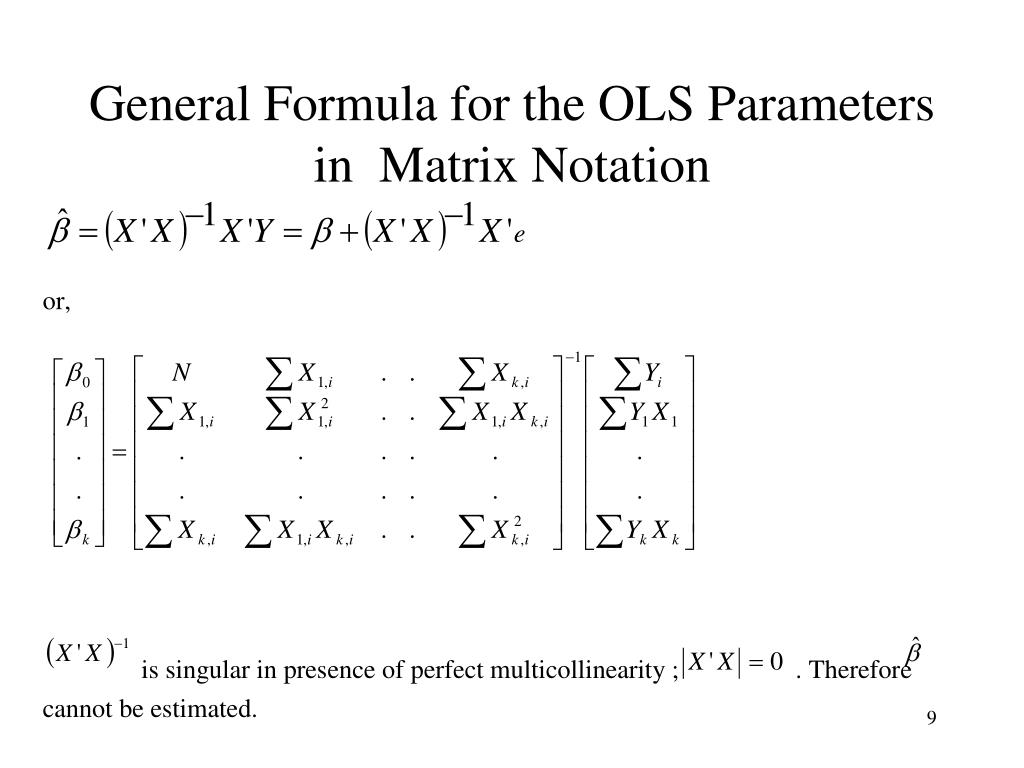

PPT Econometrics 1 PowerPoint Presentation, free download ID1274166


OLS in Matrix Form YouTube


OLS in Matrix form sample question YouTube


PPT Economics 310 PowerPoint Presentation, free download ID365091


For Vector x, x′x = Sum Of Squares Of The Elements Of x (Scalar); For Vector x, xx′ = n × n Matrix With ij-th Element x_i x_j

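The two vector products named in the heading above can be checked numerically. This is a small sketch with an arbitrary example vector (the values and variable names are assumptions chosen for illustration): the inner product gives a scalar, the outer product gives a square matrix.

```python
import numpy as np

x = np.array([1.0, 2.0, 3.0])  # illustrative vector with n = 3 elements

inner = x @ x            # x'x: the sum of squares, 1 + 4 + 9 = 14
outer = np.outer(x, x)   # xx': n by n matrix whose (i, j) entry is x[i] * x[j]
```

The diagonal of `outer` holds the squared elements of x, so its trace equals `inner`.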

We Present Here The Main OLS Algebraic And Finite Sample Results In Matrix Form:

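A sketch of the standard textbook derivation follows, under the assumption that X is the n × k design matrix with full column rank and y is the n × 1 vector of observations. The symbols b (a candidate k × 1 coefficient vector), S (the sum of squared errors), \hat{\beta}, and \hat{e} are introduced here for illustration. The sum of squared errors is

\[
S(b) = (y - Xb)'(y - Xb) = y'y - 2\,b'X'y + b'X'Xb .
\]

Setting the derivative with respect to b to zero gives the normal equations and, because full column rank makes X'X invertible, the estimator:

\[
\frac{\partial S}{\partial b} = -2\,X'y + 2\,X'Xb = 0
\quad\Longrightarrow\quad
X'X\hat{\beta} = X'y
\quad\Longrightarrow\quad
\hat{\beta} = (X'X)^{-1}X'y .
\]

The residual vector \hat{e} = y - X\hat{\beta} then satisfies

\[
X'\hat{e} = X'y - X'X\hat{\beta} = 0 ,
\]

so the residuals are orthogonal to every column of X.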